Artificial Intelligence with TensorFlow (Part 12): Implementing Overfitting and Dropout in Code

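In the previous installment, "Overfitting and Dropout", we covered overfitting and dropout in TensorFlow. This time we write TensorFlow code that demonstrates the overfitting problem and shows how dropout addresses it.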

The overfitting problem

We load a dataset from scikit-learn (the handwritten digits) to train on and analyze in this installment.

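    import tensorflow as tf
    from sklearn.datasets import load_digits
    # sklearn.cross_validation was removed in scikit-learn 0.20; train_test_split now lives in model_selection
    from sklearn.model_selection import train_test_split
    from sklearn.preprocessing import LabelBinarizer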

Prepare the data and split it into a training set and a test set, holding out 30% for testing.

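    digits = load_digits()
    X = digits.data                          # one row of 64 pixel values per image
    y = digits.target                        # integer labels 0-9
    y = LabelBinarizer().fit_transform(y)    # one-hot encode the labels: 10 columns
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)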
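To make the preprocessing concrete, here is a plain-Python sketch of what the two steps amount to. It does not use scikit-learn. First, it one-hot encodes the labels, as `LabelBinarizer` does for a 10-class problem. Second, it computes the train/test sizes produced by `test_size=0.3`. The helper names `one_hot` and `split_sizes` are hypothetical. The rounding is an assumption that mirrors scikit-learn's implementation: the test size is rounded up and the training set gets the rest.

```python
import math

def one_hot(labels, num_classes=10):
    """Turn integer class labels into one-hot rows (like LabelBinarizer for 10 classes)."""
    rows = []
    for label in labels:
        row = [0] * num_classes
        row[label] = 1          # a single 1 in the column of the true class
        rows.append(row)
    return rows

def split_sizes(n_samples, test_size=0.3):
    """Return (n_train, n_test): the test share is rounded up, training gets the remainder."""
    n_test = math.ceil(test_size * n_samples)
    return n_samples - n_test, n_test

print(one_hot([3, 0]))
print(split_sizes(1797))  # load_digits() has 1797 samples -> (1257, 540)
```

Each one-hot row has exactly one 1, which is why the label placeholder defined later has 10 columns.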

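Define the layer function. Most of it is code from earlier installments. The line that matters here is `tf.nn.dropout`, which applies dropout to each layer's output.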

    def add_layer(inputs, in_size, out_size, layer_name, activation_function=None):
        Weights = tf.Variable(tf.random_normal([in_size, out_size]))
        biases = tf.Variable(tf.zeros([1, out_size]) + 0.1)
        Wx_plus_b = tf.matmul(inputs, Weights) + biases
        # Apply dropout to the layer's pre-activation output.
        # keep_prob is the global placeholder defined below; Python looks it up when add_layer is called.
        Wx_plus_b = tf.nn.dropout(Wx_plus_b, keep_prob)
        if activation_function is None:
            outputs = Wx_plus_b
        else:
            outputs = activation_function(Wx_plus_b)
        # Record the layer's outputs for TensorBoard's histogram view
        tf.summary.histogram(layer_name + '/outputs', outputs)
        return outputs
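In TensorFlow 1.x, `tf.nn.dropout(x, keep_prob)` keeps each element with probability `keep_prob` and scales the kept elements by `1 / keep_prob`. It sets the rest to 0. This keeps the expected value of every activation unchanged, so no rescaling is needed at test time. The plain-Python sketch below reproduces that rule for one list of activations. The function name `dropout` and the `rng` parameter are illustrative and are not TensorFlow API.

```python
import random

def dropout(values, keep_prob, rng=random):
    """Inverted dropout: keep each value with probability keep_prob and
    scale survivors by 1/keep_prob; dropped values become 0."""
    if not 0 < keep_prob <= 1:
        raise ValueError("keep_prob must be in (0, 1]")
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0], keep_prob=1.0, rng=rng))  # [1.0, 2.0, 3.0]: nothing is dropped
out = dropout([1.0] * 10000, keep_prob=0.5, rng=rng)
print(sum(out) / len(out))  # close to 1.0: the mean is preserved on average
```

With `keep_prob=0.5`, every surviving value is doubled to 2.0 and roughly half become 0.0. The average therefore stays near the original 1.0.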

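Define placeholders for X, Y and keep_prob. keep_prob is the probability that each unit is kept, so a fraction 1 - keep_prob of the units is dropped.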

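    xs = tf.placeholder(tf.float32, [None, 64])  # each sample is an 8x8 image flattened to 64 pixels
    ys = tf.placeholder(tf.float32, [None, 10])  # one-hot labels for the 10 digits
    keep_prob = tf.placeholder(tf.float32)       # fed at run time; 1 means no dropout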
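The shape `[None, 64]` works because each digit in `load_digits()` is an 8x8 grayscale image. Its pixels are stored row by row as a 64-element vector in `digits.data`. The `None` dimension lets you feed a batch of any size. Below is a plain-Python sketch of that row-major flattening; `flatten_image` is a hypothetical helper.

```python
def flatten_image(image):
    """Flatten a 2-D image (list of rows) row by row: pixel (r, c) -> index r * width + c."""
    return [pixel for row in image for pixel in row]

# A toy 8x8 "image" whose pixel value encodes its own position: 10 * row + column.
image = [[10 * r + c for c in range(8)] for r in range(8)]
flat = flatten_image(image)
print(len(flat))        # 64, matching the placeholder shape [None, 64]
print(flat[8 * 2 + 5])  # 25: the pixel at row 2, column 5
```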

Add the layers, define the loss, and create the initializer.

    # Add the layers
    lay_one = add_layer(xs, 64, 50, 'lay_one', activation_function=tf.nn.tanh)  # hidden layer
    prediction = add_layer(lay_one, 50, 10, 'lay_two', activation_function=tf.nn.softmax)  # output layer
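The two `add_layer` calls build a 64 → 50 → 10 network. It has a tanh hidden layer followed by a softmax output layer, and each layer computes `activation(x · W + b)`. The sketch below runs that forward pass in plain Python on toy sizes (2 → 3 → 2) with hand-picked weights, and it leaves out dropout. The names `dense`, `tanh_all` and `softmax` and all the numbers are illustrative assumptions.

```python
import math

def dense(x, weights, biases, activation=None):
    """One fully connected layer for a single input vector: activation(x @ W + b)."""
    out = [sum(xi * weights[i][j] for i, xi in enumerate(x)) + biases[j]
           for j in range(len(biases))]
    return activation(out) if activation else out

def tanh_all(values):
    return [math.tanh(v) for v in values]

def softmax(logits):
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

x = [1.0, -1.0]                                # toy 2-feature input
W1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]      # 2 x 3 hidden-layer weights
b1 = [0.1, 0.1, 0.1]                           # same 0.1 bias init as add_layer
W2 = [[1.0, -1.0], [0.5, 0.5], [-0.3, 0.2]]    # 3 x 2 output-layer weights
b2 = [0.1, 0.1]

hidden = dense(x, W1, b1, tanh_all)   # every value lies in (-1, 1)
probs = dense(hidden, W2, b2, softmax)
print(probs, sum(probs))              # two probabilities that sum to 1 (up to rounding)
```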

    # Loss: cross-entropy between the one-hot labels and the softmax output
    cross_entropy = tf.reduce_mean(-tf.reduce_sum(ys * tf.log(prediction), axis=1))
    tf.summary.scalar('loss', cross_entropy)  # log the loss so TensorBoard can plot it
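The loss line computes the mean cross-entropy over a batch, L = -(1/N) Σ_n Σ_j y_nj · log(p_nj). With one-hot labels, only the log-probability of the true class survives. Below is a plain-Python version of the same formula with a worked value. The `eps` clip is an addition that the original code does not have. It is included because `tf.log(prediction)` gives `-inf` when a predicted probability reaches exactly 0, which can turn the loss into NaN or infinity.

```python
import math

def cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean over samples of -sum_j y_j * log(p_j); eps guards against log(0)."""
    per_sample = [-sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row))
                  for t_row, p_row in zip(y_true, y_pred)]
    return sum(per_sample) / len(per_sample)

y_true = [[0, 1, 0], [1, 0, 0]]
y_pred = [[0.2, 0.7, 0.1], [0.5, 0.25, 0.25]]
print(round(cross_entropy(y_true, y_pred), 4))  # (-ln 0.7 - ln 0.5) / 2 = 0.5249
```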

    train_step = tf.train.GradientDescentOptimizer(0.6).minimize(cross_entropy)
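`GradientDescentOptimizer(0.6)` applies plain gradient descent, θ ← θ - 0.6 · ∂L/∂θ, to every trainable variable at each `train_step`. For softmax followed by cross-entropy, the gradient of the loss with respect to the logits z is simply p - y. The sketch below applies one such step directly to a toy logit vector. In the real network, the update instead reaches `Weights` and `biases` through the chain rule. All names and numbers here are illustrative.

```python
import math

def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]

def loss(z, y):
    """Cross-entropy of softmax(z) against a one-hot label y."""
    return -sum(t * math.log(p) for t, p in zip(y, softmax(z)))

def gd_step(z, y, learning_rate=0.6):
    """One gradient-descent step; d(loss)/dz for softmax + cross-entropy is p - y."""
    grad = [p - t for p, t in zip(softmax(z), y)]
    return [v - learning_rate * g for v, g in zip(z, grad)]

z, y = [1.0, 2.0, 0.5], [1, 0, 0]
z_new = gd_step(z, y)
print(loss(z, y), loss(z_new, y))  # the loss decreases after the step
```

The step raises the logit of the true class and lowers the others. Because p - y sums to zero, the total of the logits stays the same.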

    # Initialize the variables and merge all summaries into a single op
    init = tf.global_variables_initializer()
    merged = tf.summary.merge_all()

Train the network, collecting summaries on both the training data and the test data.

with tf.Session() as sess:

# summary writer

train_writer = tf.summary.FileWriter("logs/train", sess.graph)

test_writer = tf.summary.FileWriter("logs/test", sess.graph)

sess.run(init)

for i in range(500):

sess.run(train_step, feed_dict={xs: X_train, ys: y_train, keep_prob: 1})

#keep_prob 系数:1是无drop,我们可以使用不同的系数,来对比overfiting的效果

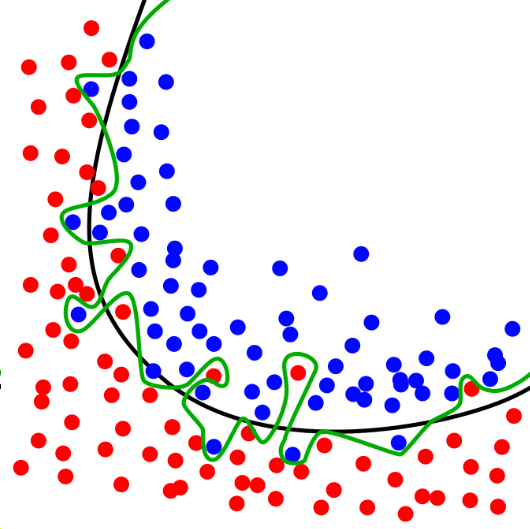

#本期小编使用1 与0.5 的系数对比,通过如下图片可以看出无drop时,2个数据的loss存在明显的差别,当使用0.5的drop时,2个数据的loss几乎完全吻合

if i % 50 == 0:

train_result = sess.run(merged, feed_dict={xs: X_train, ys: y_train, keep_prob: 1})

test_result = sess.run(merged, feed_dict={xs: X_test, ys: y_test, keep_prob: 1})

train_writer.add_summary(train_result, i)

test_writer.add_summary(test_result, i)

存在overfiting

无overfiting

Much of the code above was covered in earlier articles. If any part of it is unclear, see the previous installments.

Coming up next:

That wraps up the TensorFlow basics. Starting with the next installment, we will use TensorFlow to cover the fundamentals and applications of CNNs.

Thanks for your likes and shares! If you have any questions about these articles, let's discuss them together in the comments!